Neural networks have transformed artificial intelligence by enabling machines to learn patterns directly from data, using an approach loosely inspired by how biological brains process information. Understanding how these systems work is essential for anyone interested in modern AI development.

What Are Neural Networks?

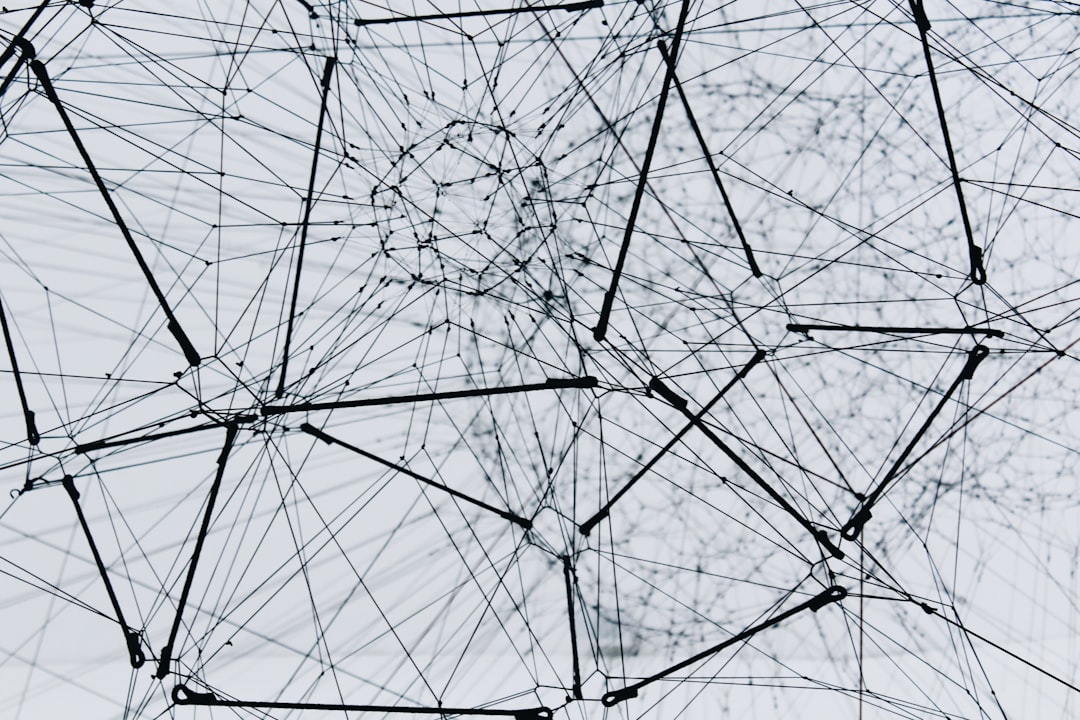

Neural networks are computational models inspired by the biological neural networks in animal brains. They consist of interconnected nodes, or neurons, organized in layers that process information through weighted connections. The fundamental principle behind neural networks is their ability to learn patterns from data through a process called training.

At their core, neural networks transform input data through multiple layers of mathematical operations, progressively extracting higher-level features until they can make predictions or classifications. This hierarchical approach to learning has proven remarkably effective across diverse applications, from image recognition to natural language processing.

The Architecture of Neural Networks

A typical neural network consists of three main types of layers: the input layer, hidden layers, and the output layer. The input layer receives raw data, which could be pixel values in an image, words in a sentence, or numerical features in a dataset. This data is then passed through one or more hidden layers, where most of the feature extraction occurs, before the output layer produces the final prediction.

Each neuron in a hidden layer receives inputs from the previous layer, applies weights to these inputs, sums them together, adds a bias term, and then passes the result through an activation function. This activation function introduces non-linearity into the network, allowing it to learn complex patterns that linear models cannot capture.
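To make the neuron computation concrete, here is a minimal NumPy sketch of a single neuron and of a full layer of neurons. The function names, the choice of ReLU as the activation function, and all numeric values are assumptions made for illustration; real networks learn their weights and biases during training.

```python
import numpy as np

def relu(z):
    """ReLU activation: max(0, z), applied element-wise."""
    return np.maximum(0.0, z)

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = np.dot(weights, inputs) + bias
    return relu(z)

def dense_layer(inputs, weight_matrix, biases):
    """A layer is many neurons at once: one row of weights per neuron."""
    return relu(weight_matrix @ inputs + biases)

x = np.array([1.0, 2.0, 3.0])        # outputs of the previous layer
w = np.array([0.5, -1.0, 0.25])      # one weight per input (illustrative values)
b = 1.0                              # bias term

y = neuron_output(x, w, b)           # 0.5 - 2.0 + 0.75 + 1.0 = 0.25

W = np.array([[0.5, -1.0, 0.25],     # weights of neuron 0 (same as above)
              [-1.0, 0.0, 0.0]])     # weights of neuron 1
layer_out = dense_layer(x, W, np.array([1.0, 0.0]))
```

The second neuron's weighted sum is negative, so ReLU clips it to zero. That clipping is the non-linearity: without it, stacking layers would collapse into a single linear transformation.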

From Perceptrons to Deep Learning

The journey of neural networks began with the perceptron, a simple single-layer neural network developed in the 1950s. Perceptrons can solve linearly separable problems, but a single layer cannot represent functions that are not linearly separable, such as XOR. The popularization of the backpropagation algorithm for training multi-layer perceptrons in the 1980s marked a major breakthrough, enabling networks to learn more complex functions.
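The limitation of a single-layer perceptron can be seen in a few lines of code. The sketch below uses the classic perceptron learning rule with a step activation; the function names and hyperparameters are illustrative choices, not taken from any particular library. It learns the linearly separable AND function, but no setting of its weights can reproduce XOR.

```python
import numpy as np

def train_perceptron(X, y, epochs=20, lr=1.0):
    """Perceptron rule: nudge the weights whenever a sample is misclassified."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if np.dot(w, xi) + b > 0 else 0
            error = target - pred          # 0 if correct, otherwise +1 or -1
            w += lr * error * xi
            b += lr * error
    return w, b

def predict(X, w, b):
    """Step activation applied to the weighted sum of each row of X."""
    return (X @ w + b > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])   # linearly separable
y_xor = np.array([0, 1, 1, 0])   # not linearly separable

w_and, b_and = train_perceptron(X, y_and)
and_preds = predict(X, w_and, b_and)     # matches y_and

w_xor, b_xor = train_perceptron(X, y_xor)
xor_preds = predict(X, w_xor, b_xor)     # at least one point is always wrong
```

Adding a hidden layer with a non-linear activation removes this restriction, which is why multi-layer networks trained with backpropagation were such an important step.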

The modern era of deep learning emerged in the 2010s, driven by increased computational power, larger datasets, and architectural innovations. Deep neural networks, with their many hidden layers, can automatically discover intricate patterns in data without manual feature engineering. This capability has led to dramatic improvements in tasks like computer vision, speech recognition, and language understanding.

Training Neural Networks

Training a neural network involves adjusting its weights and biases to minimize the difference between predicted outputs and actual targets. This process uses an optimization algorithm, typically gradient descent or one of its variants, combined with backpropagation to compute gradients efficiently.

During training, the network processes batches of data, makes predictions, calculates a loss function that measures prediction error, and then updates weights in the direction that reduces this loss. This iterative process continues for many epochs (full passes over the training set) until the network achieves satisfactory performance, ideally measured on held-out validation data rather than on the training data alone.
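The training loop described above can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not a production recipe: the toy dataset (fitting a sine curve), the one-hidden-layer architecture, the mean squared error loss, and every hyperparameter are made up for the example, and the gradients are derived by hand rather than by a framework.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (an assumption for this sketch): learn y = sin(x).
X = rng.uniform(-3.0, 3.0, size=(256, 1))
Y = np.sin(X)

# One hidden layer of 16 tanh units followed by a linear output.
hidden = 16
W1 = rng.normal(0.0, 0.5, size=(1, hidden))
b1 = np.zeros(hidden)
W2 = rng.normal(0.0, 0.5, size=(hidden, 1))
b2 = np.zeros(1)

def forward(x):
    """Return the hidden activations and the network's prediction."""
    h = np.tanh(x @ W1 + b1)
    return h, h @ W2 + b2

def mse(pred, target):
    """Mean squared error loss."""
    return float(np.mean((pred - target) ** 2))

initial_loss = mse(forward(X)[1], Y)

learning_rate, batch_size, epochs = 0.01, 32, 200
for epoch in range(epochs):
    order = rng.permutation(len(X))              # reshuffle every epoch
    for start in range(0, len(X), batch_size):
        batch = order[start:start + batch_size]
        xb, yb = X[batch], Y[batch]

        # Forward pass: make predictions for this batch.
        h, pred = forward(xb)

        # Backward pass: apply the chain rule layer by layer.
        grad_pred = 2.0 * (pred - yb) / pred.size    # d(loss)/d(pred)
        grad_W2 = h.T @ grad_pred
        grad_b2 = grad_pred.sum(axis=0)
        grad_h = grad_pred @ W2.T
        grad_z = grad_h * (1.0 - h ** 2)             # derivative of tanh
        grad_W1 = xb.T @ grad_z
        grad_b1 = grad_z.sum(axis=0)

        # Gradient descent step: move against the gradient.
        W1 -= learning_rate * grad_W1
        b1 -= learning_rate * grad_b1
        W2 -= learning_rate * grad_W2
        b2 -= learning_rate * grad_b2

final_loss = mse(forward(X)[1], Y)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In practice, frameworks compute the backward pass automatically, and the loss would also be tracked on a separate validation set to decide when to stop.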

Common Neural Network Architectures

Different problems call for different network architectures. Convolutional Neural Networks (CNNs) excel at processing grid-like data such as images: their convolutional layers slide the same small filter across the input, which exploits local spatial structure and keeps the number of weights small. Recurrent Neural Networks (RNNs) are designed for sequential data, maintaining an internal state that is updated at each step to process time series or text.
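As a rough illustration of what a convolutional layer computes, the sketch below slides a small filter over a tiny image. It is a deliberately naive version (single channel, stride 1, no padding, explicit loops), and the image and filter values are invented for the example. In a real CNN the filter values are learned and the operation is heavily optimized.

```python
import numpy as np

def conv2d(image, kernel):
    """Stride-1, no-padding 2D cross-correlation, the operation CNN layers use."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)   # same weights at every position
    return out

# A 4x4 image that is dark on the left and bright on the right.
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)

# A 1x2 filter that responds where brightness increases from left to right.
kernel = np.array([[-1.0, 1.0]])

edges = conv2d(image, kernel)
# Each output row is [0, 1, 0]: the filter fires only at the vertical edge.
```

Because the same two weights are reused at every position, the layer detects the edge wherever it appears in the image.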

More recent innovations include attention mechanisms and transformer architectures, which have achieved state-of-the-art results in natural language processing. These architectures use self-attention to weigh the importance of different parts of the input, enabling them to capture long-range dependencies more effectively than traditional recurrent networks.
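Self-attention is easier to follow in code than in words. The following is a minimal sketch of single-head scaled dot-product self-attention in NumPy. The dimensions and the randomly initialized projection matrices `Wq`, `Wk`, and `Wv` are assumptions for illustration; in a trained transformer these matrices are learned, and multiple heads, masking, and further layers are added on top.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence X."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv             # queries, keys, values
    scores = Q @ K.T / np.sqrt(K.shape[-1])      # similarity of every pair of positions
    weights = softmax(scores, axis=-1)           # each row sums to 1
    return weights @ V, weights                  # weighted mix of the values

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4                  # illustrative sizes
X = rng.normal(size=(seq_len, d_model))          # one embedding per position
Wq = rng.normal(size=(d_model, d_k))
Wk = rng.normal(size=(d_model, d_k))
Wv = rng.normal(size=(d_model, d_k))

output, weights = self_attention(X, Wq, Wk, Wv)
print(weights.round(2))   # row i: how much position i attends to each position
```

Every position attends to every other position in a single step, regardless of distance. This is why attention handles long-range dependencies more directly than a recurrent network, which has to carry information through its state one step at a time.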

Challenges and Considerations

Despite their power, neural networks face several challenges. Overfitting occurs when a network learns the training data too well, including its noise and peculiarities, resulting in poor generalization to new data. Regularization techniques like dropout, weight decay, and data augmentation help mitigate this issue.
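Two of these regularization techniques are simple enough to sketch directly. The code below shows "inverted" dropout, a common formulation that rescales the surviving activations during training, and a gradient descent step with weight decay expressed as an L2 penalty. The function names and numeric values are hypothetical and chosen only for illustration.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: randomly zero units while training, rescale the rest."""
    if not training or drop_prob == 0.0:
        return activations                        # no-op at evaluation time
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob         # keeps the expected value unchanged

def sgd_step_with_weight_decay(w, grad, lr, weight_decay):
    """Gradient step where an L2 penalty pulls every weight toward zero."""
    return w - lr * (grad + weight_decay * w)

rng = np.random.default_rng(0)
h = np.ones((4, 5))                               # pretend hidden activations
h_train = dropout(h, 0.5, rng, training=True)     # entries are 0.0 or 2.0
h_eval = dropout(h, 0.5, rng, training=False)     # unchanged

w = np.array([1.0, -2.0])
# With a zero data gradient, weight decay alone shrinks the weights by 5%.
w_new = sgd_step_with_weight_decay(w, grad=np.zeros(2), lr=0.1, weight_decay=0.5)
```

Dropout discourages the network from relying on any single unit, while weight decay discourages large weights. Both make it harder for the model to memorize noise in the training data.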

Neural networks also tend to require substantial computational resources and large amounts of training data. They can be difficult to interpret as well: they often function as black boxes, making it hard to understand why a particular prediction was made. Research into explainable AI aims to address this limitation.

Practical Applications

The applications of neural networks are vast and growing. In computer vision, they power facial recognition systems, autonomous vehicles, and medical image analysis. In natural language processing, they enable machine translation, sentiment analysis, and conversational AI. They're also used in recommendation systems, fraud detection, and scientific research.

As neural networks continue to evolve, they're being applied to increasingly complex problems. From drug discovery to climate modeling, these systems are helping researchers and practitioners tackle challenges that were previously intractable.

The Future of Neural Networks

The field of neural networks is advancing rapidly. Researchers are developing more efficient architectures that require less data and computation. Techniques like transfer learning allow pre-trained networks to be adapted to new tasks with relatively little additional training. Neural architecture search uses automated methods to find well-performing network designs for specific problems.

Understanding neural networks is increasingly important for anyone working with AI and machine learning. While the mathematics can be complex, the fundamental concepts are accessible, and numerous tools and frameworks make it easier than ever to build and deploy these powerful systems. As we continue to push the boundaries of what neural networks can achieve, their impact on technology and society will only grow.